When AI Slows You Down

- Juan Manuel Ortiz de Zarate

- Aug 2, 2025

In recent years, the idea that artificial intelligence can significantly enhance productivity, particularly for software developers [2,3,4,7], has become an article of faith in both tech and economic circles. Proponents cite impressive benchmark results, thriving AI coding assistants like GitHub Copilot, and anecdotal productivity boosts from large language models. Yet a new randomized controlled trial conducted by METR (Model Evaluation & Threat Research) challenges this narrative head-on, revealing that for experienced developers working in real-world settings, AI may actually slow things down.

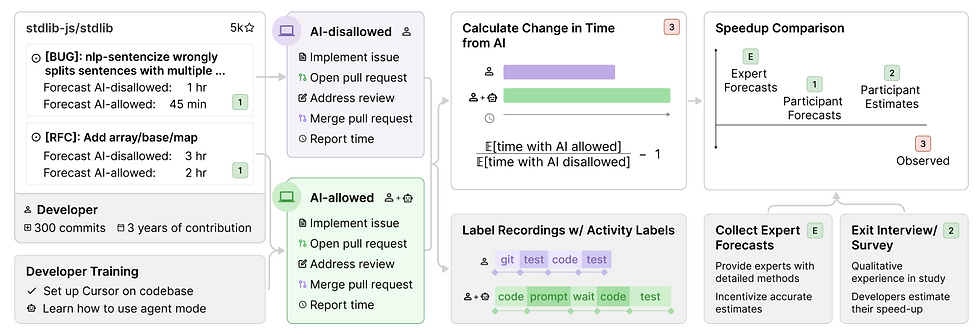

Titled “Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity” [1], the study takes a rigorous, field-based approach to understanding AI’s real impact on productivity. Rather than relying on synthetic coding tasks or vague output metrics, the researchers studied 16 experienced developers working in their own open-source repositories and measured the time they took to complete 246 genuine software development issues, with and without access to state-of-the-art AI tools such as Cursor Pro [5] and Claude 3.5/3.7 [6].

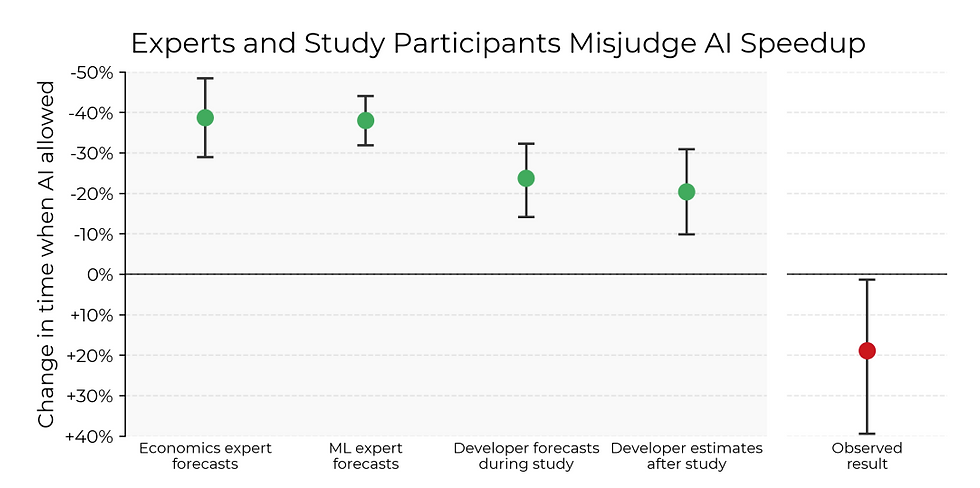

The findings are striking: when AI tools were allowed, developers took 19% longer to complete tasks on average.

The Method Behind the Surprise

At the heart of the study’s credibility lies its methodologically conservative and carefully structured design. Unlike prior work that relied on toy problems or output proxies, this research emphasizes ecological validity: developers worked on real issues, in real repositories, using workflows they themselves helped define.

Selection of Participants and Tasks

The participant pool consisted of 16 seasoned open-source developers, most of whom had over a decade of professional experience. They were also deeply familiar with the specific repositories they worked on, averaging five years of active contribution and 1,500 commits per repository. The study was therefore not testing AI in isolation, but pitting AI-assisted work against humans operating near their peak efficiency in familiar technical environments.

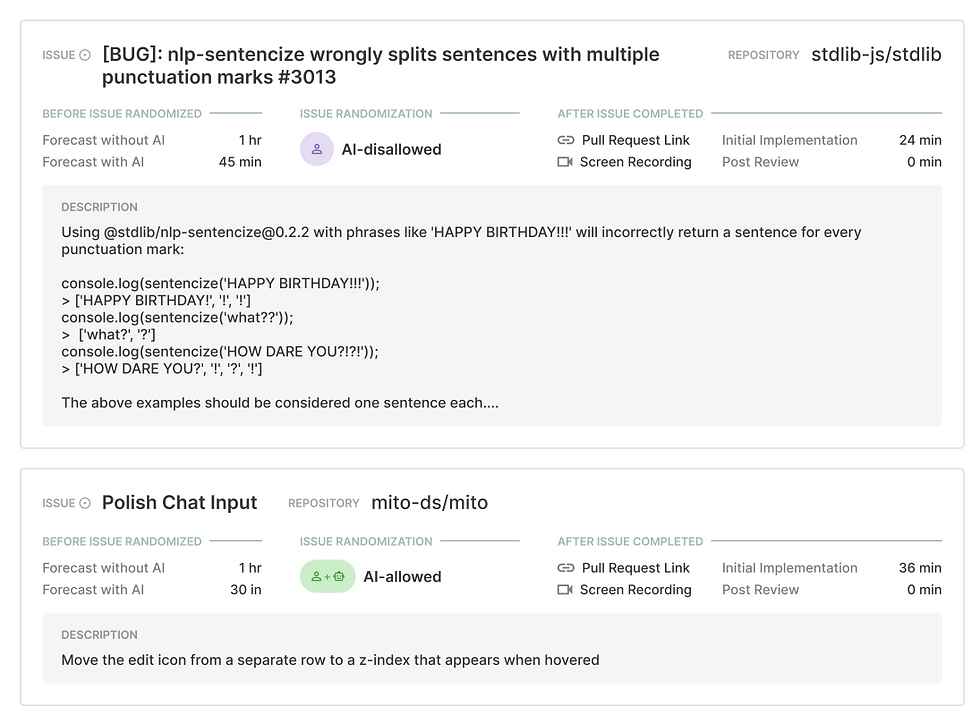

Developers were asked to submit a curated list of issues that reflected their current backlog. These were not artificially simplified or benchmark tasks; they ranged from fixing subtle bugs to implementing new features and refactoring legacy code. Each issue had to be scoped to take no more than two hours, keeping tasks feasible while still capturing meaningful complexity.

Randomized Assignment and Forecasting

Each task was independently randomized to one of two conditions: AI-allowed or AI-disallowed. This randomization happened after developers forecasted how long they expected each task to take under both conditions. These dual forecasts served two purposes: first, to estimate expected speedup on a per-task basis, and second, to act as a control for issue difficulty when interpreting results.

Because randomization occurred after forecasting, the study could separate the effect of AI usage from potential biases in task selection or perceived difficulty. This precommitment to issue definitions ensured that developers could not pair simpler tasks with AI or steer harder ones away from it.
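The forecast-then-randomize ordering can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the `Issue` class, the `randomize` function, the field names, and the fair 50/50 coin flip are all assumptions made for the example. What it shows is that both forecasts are stored first, and the assignment step never reads them.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Issue:
    """Hypothetical backlog issue with the developer's two forecasts (minutes)."""
    issue_id: str
    forecast_ai_min: float          # expected time if AI is allowed
    forecast_no_ai_min: float       # expected time if AI is disallowed
    condition: Optional[str] = None  # set only after both forecasts are locked in

    @property
    def forecast_speedup(self) -> float:
        """Forecast fractional time saving from AI (0.25 means 25% less time expected)."""
        return 1.0 - self.forecast_ai_min / self.forecast_no_ai_min


def randomize(issues: list[Issue], seed: int) -> list[Issue]:
    """Independently assign each issue to a condition with an assumed fair coin flip.

    The forecasts are never consulted, so perceived difficulty cannot
    influence which condition an issue receives.
    """
    rng = random.Random(seed)
    for issue in issues:
        if issue.condition is not None:
            raise ValueError(f"{issue.issue_id} was already assigned")
        issue.condition = rng.choice(["ai_allowed", "ai_disallowed"])
    return issues


# Usage with invented forecasts: record forecasts first, then randomize.
backlog = [Issue("A", 60, 80), Issue("B", 90, 100), Issue("C", 45, 45)]
for issue in randomize(backlog, seed=7):
    print(issue.issue_id, f"{issue.forecast_speedup:.0%}", issue.condition)
```

Refusing to re-randomize an already assigned issue mirrors the precommitment idea: once an issue has a condition, it keeps it.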

Natural Workflow, Minimal Interference

Another key strength of the study is its minimal intrusion into developers’ natural working styles. Participants were not confined to specific development environments, though many opted to use Cursor Pro, a VS Code-based AI-augmented IDE provided by the researchers. Cursor integrates LLM-powered assistance into the editor, including tools for multi-file edits, command-line interaction, and iterative debugging.

Developers could also use other AI tools, such as web-based assistants, or refrain from AI altogether if they deemed it unhelpful. The point was not to prescribe usage, but to observe how AI affects productivity when developers are given freedom to integrate it as they would under normal conditions.

Importantly, all work, regardless of condition, was submitted through the same rigorous pull request processes used by the respective open-source communities. That meant peer review, adherence to contribution guidelines, and functional correctness all remained integral parts of the task completion pipeline.

Multimodal Data Collection

To gain insight beyond raw completion times, the study collected a rich multimodal dataset. Screen recordings captured developers’ real-time workflows, including interactions with terminals, editors, and browsers. These were manually annotated at 10-second resolution for fine-grained behavioral analysis.

In addition to video, developers completed self-reports for each task, detailing how much time was spent pre- and post-review, whether they used AI, and how they felt about the outcome. Exit interviews provided qualitative depth, shedding light on strategies, frustrations, and perceived AI value.

A subset of participants also had usage data collected from Cursor itself, allowing the researchers to measure how often developers accepted or rejected AI code completions, how many tokens were used per prompt, and which models were most relied upon.

Control for Confounding Variables

To mitigate noise and increase statistical power, the researchers used log-linear regression models incorporating developer-provided difficulty forecasts as covariates. This allowed them to account for heterogeneity in task complexity across conditions. Even when applying alternative estimators, trimming the data, or adjusting for dropout, the core result remained consistent: a statistically significant 19% increase in time when AI was used.

In other words, the slowdown wasn’t a fluke; it held under multiple analytic lenses, including naive time ratios, regression-adjusted comparisons, and robustness checks on imputation and randomization procedures.
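Two of these lenses can be made concrete with a small pure-Python sketch. It assumes a log-linear specification of the form ln(time) = a + b·AI + d·ln(forecast), with the developer's time forecast as the only covariate, and reads the AI effect as exp(b) - 1. The study's exact specification, covariates, and standard errors may differ; the function names and the toy data are hypothetical.

```python
import math


def solve_linear(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ai_effect_loglinear(times, ai, forecasts):
    """OLS fit of ln(time) = a + b*AI + d*ln(forecast); returns exp(b) - 1.

    Positive values mean AI-allowed issues took longer, holding the forecast fixed.
    """
    rows = [[1.0, float(flag), math.log(f)] for flag, f in zip(ai, forecasts)]
    y = [math.log(t) for t in times]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    _, b, _ = solve_linear(xtx, xty)
    return math.exp(b) - 1.0


def naive_ratio(times, ai):
    """Geometric-mean time with AI over geometric-mean time without AI, minus one."""
    def gmean(xs):
        return math.exp(sum(math.log(x) for x in xs) / len(xs))
    with_ai = [t for t, flag in zip(times, ai) if flag]
    without_ai = [t for t, flag in zip(times, ai) if not flag]
    return gmean(with_ai) / gmean(without_ai) - 1.0


# Toy data with a built-in 19% slowdown; the AI-allowed issues happen to be forecast as easier.
forecasts = [30, 45, 60, 90, 120, 150]  # invented forecast minutes
ai = [1, 0, 1, 0, 1, 0]
times = [f * (1.19 if flag else 1.0) for f, flag in zip(forecasts, ai)]
print(f"regression-adjusted: {ai_effect_loglinear(times, ai, forecasts):+.2f}")
print(f"naive ratio:         {naive_ratio(times, ai):+.2f}")
```

Because the toy data contain no noise, the regression recovers the 19% slowdown exactly, while the naive ratio even suggests a speedup: the AI-allowed issues in this invented sample were the easier ones. Randomization keeps such imbalances small on average as the number of issues grows; the forecast covariate mainly absorbs differences in task complexity and tightens the estimate.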

A Deep Dive into Developer Behavior

Beyond timing metrics, the most illuminating insights from the study emerge through its behavioral analysis, an unprecedented look at how experienced developers actually interact with AI tools in the wild. Rather than relying solely on outcomes, the authors dissect the process of development, yielding a nuanced understanding of why AI might hinder more than help under certain conditions.

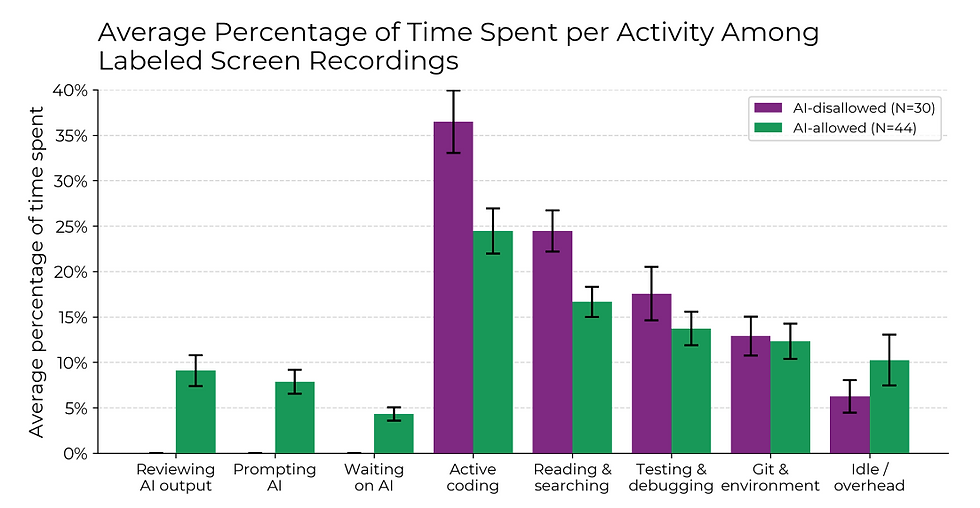

Fine-Grained Activity Labeling

The researchers manually annotated 74 screen recordings, totaling 84 hours of developer activity, using a taxonomy of 27 fine-grained behavior categories. These labels included not only obvious categories like “coding” or “debugging,” but also more specific ones such as “reviewing AI outputs,” “prompting AI,” “waiting on AI generation,” and “idle.” This enabled a granular comparison between AI-allowed and AI-disallowed workflows at roughly 10-second resolution.

The resulting profile is revealing. When AI is permitted, developers spend significantly less time actively writing code and less time searching for documentation or reference material. At first glance, this might suggest that AI is doing some of the heavy lifting, but that’s not the whole story.

In reality, the reduced coding and search time is offset by a redistribution of effort: developers now spend a substantial share of their time managing the AI itself. This includes crafting prompts, waiting for completions, reviewing suggestions, and, crucially, modifying or discarding them. Many tasks involved multiple cycles of querying, inspecting, and revising AI output before arriving at acceptable code. In effect, the cognitive burden shifts from problem-solving to interfacing with a semi-reliable collaborator.
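The kind of time profile described in this subsection can be derived from 10-second labels with simple bookkeeping. This is a minimal sketch under stated assumptions: each recording is reduced to one category label per 10-second window, the helper names are hypothetical, the label sequences are invented, and the category names only echo a few of those mentioned above.

```python
from collections import Counter

SECONDS_PER_LABEL = 10  # assumption: one label per 10-second window


def time_shares(labels: list[str]) -> dict[str, float]:
    """Fraction of labeled time spent in each activity category."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


def minutes_by_category(labels: list[str]) -> dict[str, float]:
    """Total minutes per category, given one label per 10-second window."""
    return {category: n * SECONDS_PER_LABEL / 60 for category, n in Counter(labels).items()}


def share_difference(ai_labels: list[str], no_ai_labels: list[str]) -> dict[str, float]:
    """Share of time per category with AI allowed minus share with AI disallowed."""
    with_ai, without_ai = time_shares(ai_labels), time_shares(no_ai_labels)
    categories = sorted(set(with_ai) | set(without_ai))
    return {c: with_ai.get(c, 0.0) - without_ai.get(c, 0.0) for c in categories}


# Invented 10-minute recordings (60 windows each), for illustration only.
ai_run = (["coding"] * 30 + ["prompting AI"] * 10
          + ["waiting on AI generation"] * 5 + ["reviewing AI outputs"] * 15)
no_ai_run = ["coding"] * 45 + ["searching"] * 15

for category, delta in share_difference(ai_run, no_ai_run).items():
    print(f"{category:26s} {delta:+.2f}")
```

In this toy example the drop in coding and searching is offset entirely by prompting, waiting, and reviewing, which is the redistribution pattern the study describes.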

The Cost of Mediation

The friction in these interactions is not merely theoretical. Developers often faced situations where AI-generated solutions were plausible but subtly incorrect, stylistically inconsistent, or ignorant of repository-specific conventions. This ambiguity forced developers into time-consuming review loops, where verifying AI output became as demanding as writing it manually, if not more so.

Even when developers accepted AI suggestions, they frequently treated them as drafts rather than definitive implementations. Exit interviews revealed a common theme: AI could often generate “something close,” but never quite right. For developers working in high-trust, high-rigor environments like open-source, this meant spending significant time polishing and correcting AI code to meet quality standards.

Moreover, the low acceptance rate (under 44% of AI-generated lines were ultimately used) implies a great deal of wasted effort. Developers regularly explored multiple prompts or strategies before giving up and reverting to manual coding. In some cases, AI outputs even introduced regressions or editing errors in unrelated parts of the codebase, which had to be diagnosed and undone.
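How an acceptance rate is computed matters. The sketch below is hypothetical: the `Suggestion` record is an assumed, simplified log entry (not Cursor's actual telemetry format), and the toy log is invented. It contrasts a line-level rate with the share of suggestions from which anything was kept; the same log can give quite different numbers under the two definitions.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """Hypothetical record for one AI code suggestion (not a real tool's log format)."""
    lines_suggested: int
    lines_accepted: int  # lines the developer kept; 0 if the suggestion was rejected


def line_acceptance_rate(suggestions: list[Suggestion]) -> float:
    """Accepted lines divided by suggested lines across all suggestions."""
    suggested = sum(s.lines_suggested for s in suggestions)
    if suggested == 0:
        raise ValueError("no suggested lines")
    return sum(s.lines_accepted for s in suggestions) / suggested


def suggestion_acceptance_rate(suggestions: list[Suggestion]) -> float:
    """Fraction of suggestions from which at least one line was kept."""
    if not suggestions:
        raise ValueError("no suggestions")
    return sum(1 for s in suggestions if s.lines_accepted > 0) / len(suggestions)


log = [Suggestion(20, 20), Suggestion(50, 0), Suggestion(30, 10)]
print(line_acceptance_rate(log))        # 0.3
print(suggestion_acceptance_rate(log))  # two of the three suggestions kept something
```

Here only 30% of suggested lines survive, yet two of three suggestions were at least partly accepted, which is why a reported acceptance rate should state its unit.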

Shifts in Cognitive Load

Interestingly, the study also found an increase in idle time when AI was available. This raises the possibility that interacting with AI introduces micro-frustrations or cognitive overhead that occasionally stalls workflow momentum. The pause between prompting an AI model and parsing its output may fragment attention or delay the formation of next steps, especially when multiple iterations are required.

Some developers reported that using AI was less effortful in a physical or emotional sense, but not faster. This distinction between ease and efficiency is important. The AI tools might reduce perceived difficulty, especially on unfamiliar problems, yet still extend total task time due to reliability issues and constant supervision.

Furthermore, not all developers interacted with AI in the same way. Usage patterns varied depending on prior experience with tools like Cursor, prompting strategies, and tolerance for AI “hallucinations.” Despite this variation, the aggregate result remained consistent: time spent managing AI interactions outweighed time saved in coding or searching.

From Developer to Orchestrator

One of the most compelling behavioral insights is the role shift induced by AI tools. Developers no longer act solely as implementers. Instead, they become orchestrators, tasking the AI with actions, evaluating its proposals, revising its output, and often reverting to manual intervention when needed. This role demands a different skillset: not just technical expertise, but also prompt engineering, tool fluency, and a critical eye for partial solutions.

In high-context environments, where understanding subtle constraints, architectural decisions, and historical reasoning matters, this shift can be more of a hindrance than a help. Developers must constantly compensate for what the AI doesn’t know: implicit constraints, undocumented edge cases, or stylistic expectations that aren't encoded in training data.

This orchestration overhead, while manageable in small doses, accumulates over time. As the study shows, it may turn into a net drag on productivity, particularly for developers who already possess the tacit knowledge that AI lacks.

Explaining the Slowdown: Five Key Factors

How can cutting-edge AI tools, built on models like Claude 3.7 and deployed in powerful development environments, result in slower, not faster, performance for experienced developers? To unpack this, the researchers went beyond descriptive metrics and conducted a structured analysis of 21 possible explanatory factors. These were grouped into four thematic categories: direct productivity losses, experimental artifacts, conditions that elevate human performance, and constraints that diminish AI performance.

Through developer surveys, exit interviews, screen recordings, and subset analyses, five factors emerged as credible contributors to the observed slowdown. Importantly, these are not quirks of experimental design, but systemic characteristics of the developer-AI interaction in this specific context.

1. Over-Optimism About AI Usefulness

Perhaps the most human factor of all: misplaced confidence. Before starting their tasks, developers predicted on average that using AI would cut completion time by 24%. After completing all tasks, they still believed AI had cut their completion time by 20%, even though measured results showed a 19% slowdown.

This gap between belief and measurement illustrates a psychological trap: when developers trust AI to help, they are more inclined to persist with it, even when its output is mediocre or counterproductive. This belief can lead to inefficient behavior: repeated prompting, extended tinkering with AI-suggested code, or deferring to AI when manual work might have been quicker.

The implication is profound: productivity loss isn’t always a function of poor AI output; it can stem from developers misjudging how and when to engage with AI in the first place.
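The size of the perception gap described in this factor can be checked with a few lines of arithmetic, assuming all three figures are read as percentage changes in completion time (-24%, -20%, +19%). The helper name is illustrative.

```python
def time_multiplier(pct_change: float) -> float:
    """Convert a percentage change in completion time into a multiplier (-24 -> 0.76)."""
    return 1.0 + pct_change / 100.0


forecast = time_multiplier(-24)   # developers' expectation before the tasks
perceived = time_multiplier(-20)  # developers' estimate after the tasks
observed = time_multiplier(+19)   # measured effect

gap_vs_belief = observed / perceived - 1.0   # actual time relative to what developers believed
gap_vs_forecast = observed / forecast - 1.0  # actual time relative to the original forecast
print(f"{gap_vs_belief:.1%} longer than believed, {gap_vs_forecast:.1%} longer than forecast")
```

Under that reading, tasks took roughly 49% longer than developers believed they had, and about 57% longer than they had forecast.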

2. High Developer Familiarity with the Codebase

In contrast to prior studies that recruited novices or generalists, this experiment involved domain experts working in repositories they knew intimately. On average, each developer had contributed for five years, covering more than half the repository’s lifespan, and had authored roughly 1,500 commits.

That level of embedded knowledge fundamentally alters the cost-benefit calculus of AI assistance. Tasks that a developer could perform “from muscle memory” suddenly become slower when they involve steering, interpreting, or correcting an AI assistant. In this case, the AI doesn’t augment the developer; it gets in the way.

This effect is exacerbated when the AI fails to grasp local conventions, long-term design choices, or historical rationales that are second nature to the human expert. Developers themselves reported that AI was far more useful in areas where they lacked prior exposure or needed to explore unfamiliar APIs, not on bread-and-butter tasks in code they had authored or maintained for years.

3. Repository Size and Complexity

The study repositories were no small side projects—they averaged over 1.1 million lines of code, had thousands of forks, and enforced stringent contribution standards. In such environments, even a small bug fix or feature implementation requires navigating intricate architectural dependencies and adhering to community-specific norms.

This setting presents a substantial challenge for current AI systems. LLMs may excel in isolated coding tasks, but they struggle to reason over sprawling, interdependent codebases, especially when long-range dependencies and context-specific conventions aren’t explicitly surfaced in the prompt or recent context window.

In effect, the scale and maturity of these repositories impose cognitive demands that human developers are uniquely equipped to handle, especially those who helped build them. AI tools, despite being trained on large corpora, lack this deep internalization of project-specific logic.

4. Low AI Reliability and the Hidden Cost of Cleanup

A less glamorous, but highly consequential, observation was that AI outputs were often wrong, incomplete, or stylistically inconsistent. On average, developers accepted fewer than 44% of the code lines suggested by AI, and even those accepted lines typically required revision to meet project standards.

This imposed a constant cost: reviewing, debugging, and reworking AI-generated code became a major part of the workflow. According to screen recordings, developers spent approximately 9% of their time in this validation and cleanup loop, nearly one-tenth of their total task time dedicated not to solving the problem, but to quality-controlling their automated collaborator.

What’s more, these failures weren’t always obvious. Some developers discovered subtle mistakes hours into the task, while others noticed AI making spurious changes in unrelated files, leading to time-consuming reversions or re-implementations. This unreliability eroded trust and efficiency simultaneously.

5. Implicit Repository Context and the Limits of General AI

While LLMs have made impressive strides in code completion and snippet generation, they remain weak at inferring what isn’t said. In large-scale software projects, much of what matters is unstated: implicit architectural constraints, unwritten style rules, undocumented exceptions, and team-specific preferences. Human developers internalize this context over time; AI models do not.

Multiple participants noted that AI struggled to “think like a contributor” to the repository. It might insert code in the wrong location, ignore backward-compatibility requirements, or misunderstand subtle interactions between modules. Some developers likened the AI to a new team member with no onboarding: competent in the abstract, but lost in practice.

This inability to access and act on tacit knowledge is a core limitation of today’s general-purpose AI systems. Unless explicitly fine-tuned or scaffolded with additional tools, they remain ill-equipped to handle the deeply contextual reasoning that expert-level software development often demands.

Together, these five factors don’t just explain why AI slows developers down; they outline a theory of when and where AI is likely to be counterproductive: high-context, high-complexity environments where human expertise already fills gaps that AI can’t yet reach.

Generalization and Implications

Importantly, the authors are cautious not to overgeneralize their findings. The slowdown occurred in a highly specific context: experienced developers, working on large, complex repositories, often on tasks they were already deeply familiar with.

In other domains, such as greenfield development, junior-level tasks, or unfamiliar codebases, AI tools may still offer substantial benefits. Indeed, prior work such as Peng et al. (2023) [7] and Paradis et al. (2024) found 21–56% speedups using AI on more synthetic or simplified tasks.

Still, this study exposes the fragility of assumptions underpinning AI deployment at scale. It also highlights the importance of field experiments with robust outcome measures. Relying on metrics like the number of commits or lines of code, as in some past studies, may be misleading. Verbose code is not necessarily productive code.

References

[1] J. Becker, N. Rush, E. Barnes, and D. Rein. Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity. arXiv preprint arXiv:2507.09089, 2025.

[2] Doron Yeverechyahu, Raveesh Mayya, and Gal Oestreicher-Singer. The impact of large language models on open-source innovation: Evidence from GitHub Copilot. 2025.

[3] Zheyuan Cui, Mert Demirer, Sonia Jaffe, Leon Musolff, Sida Peng, and Tobias Salz. The effects of generative AI on high-skilled work: Evidence from three field experiments with software developers. June 2025.

[4] Thomas Weber, Maximilian Brandmaier, Albrecht Schmidt, and Sven Mayer. Significant productivity gains through programming with large language models. Proc. ACM Hum.-Comput. Interact., 8(EICS), June 2024. doi: 10.1145/3661145.

[5] Cursor. The AI Code Editor.

[6] Anthropic. Claude 3.5 Sonnet.

[7] Sida Peng, Eirini Kalliamvakou, Peter Cihon, and Mert Demirer. The impact of AI on developer productivity: Evidence from GitHub Copilot. 2023.
